The Effects of Problem-Based, Project-Based, and Case-Based Learning on Students’ Motivation: a Meta-Analysis

- META-ANALYSIS

- Open access

- Published: 28 February 2024

- Volume 36, article number 29 (2024)

- Lisette Wijnia ORCID: orcid.org/0000-0001-7395-839X 1, 2

- Gera Noordzij ORCID: orcid.org/0000-0001-6213-2066 3

- Lidia R. Arends ORCID: orcid.org/0000-0001-7111-752X 4, 5

- Remigius M. J. P. Rikers ORCID: orcid.org/0000-0002-4722-1455 1

- Sofie M. M. Loyens ORCID: orcid.org/0000-0002-2419-1492 1

In this meta-analysis, we examined the effects on students’ motivation of student-centered, problem-driven learning methods compared to teacher-centered/lecture-based learning. Specifically, we considered problem-based (PBL), project-based (PjBL), and case-based learning (CBL). We viewed motivation as a multifaceted construct consisting of students’ beliefs (competence and control beliefs), perceptions of task value (interest and importance), and reasons for engaging in tasks (intrinsic or extrinsic). In addition, we included students’ attitudes toward school subjects (e.g., science). We included 139 subsamples from 132 reports (83 PBL, 37 PjBL, and 19 CBL subsamples). Overall, PBL, PjBL, and CBL had a small to moderate, heterogeneous positive effect (d = 0.498) on motivation. Moderator analyses revealed larger effect sizes for students’ beliefs, values, and attitudes than for students’ reasons for studying. No differences in effects on motivation were found between the three instructional methods. However, effect sizes were larger when problem-driven learning was applied in a single course rather than across a curriculum. Larger effects were also found in some academic domains (i.e., healthcare and STEM) than in others. While the impact of problem-driven learning on motivation is generally positive, its dependence on factors such as academic domain and implementation level underscores the need for a nuanced approach when using these instructional methods to increase student motivation.

Over recent decades, there has been a significant shift from teacher-centered to student-centered learning, emphasizing learners’ active role in learning and knowledge construction (Hoidn & Klemenčič, 2021 ; Loyens et al., 2022 ). One notable approach within this trend is problem-driven learning, which employs real-world problems to engage learners (Dolmans, 2021 ; Hung, 2015 ). This approach encompasses problem-based learning (PBL), project-based learning (PjBL), and case-based learning (CBL).

There is a prevailing belief that such student-centered, problem-driven methods enhance motivation (e.g., Harackiewicz et al., 2016 ; Hmelo-Silver, 2004 ; Larmer et al., 2015 ; Wijnia & Servant-Miklos, 2019 ). In particular, centering the learning process around real-world problems is thought to make learning more interesting and relevant to students’ lives and future professions (e.g., Barrows, 1996 ; Blumenfeld et al., 1991 ; Schmidt et al., 2011 ). Additionally, the facilitative role of teachers in student-centered learning could support students’ need for autonomy and subsequent motivation (Black & Deci, 2000 ; Ryan & Deci, 2000b , 2020 ). However, research has identified motivational challenges, including lack of participation and problems during group work (Blumenfeld et al., 1996 ; Dolmans & Schmidt, 2006 ; Dolmans et al., 2001 ), and mixed results regarding motivation compared to teacher-centered approaches (e.g., Sungur & Tekkaya, 2006 ; Wijnia et al., 2011 ).

In this meta-analysis, we aim to comprehensively examine the impact of PBL, PjBL, and CBL on student motivation. It is important to gain a deeper understanding of the overall effect of these student-centered, problem-driven learning methods, given their popularity and the assumed positive effects on student outcomes, such as motivation. The current literature suggests that effects on motivation can vary, and although the use of real-world problems and the guiding role of teachers are potential motivators, motivational challenges often arise in these learning methods. Therefore, we want to identify the different conditions and characteristics that determine how and when problem-driven learning affects motivation, by considering the role of various factors, such as educational level, duration, and level of implementation. The insights derived from this meta-analysis can empower educators and policymakers in designing and promoting effective instructional methods that support student motivation. We will begin by defining student motivation and then explore how PBL, PjBL, and CBL can influence it.

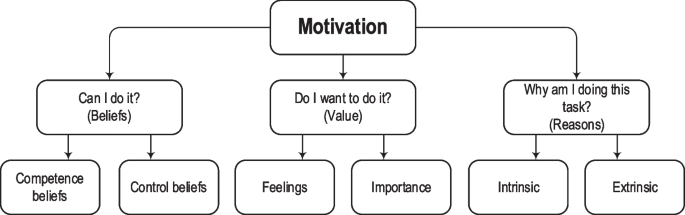

Motivation, derived from the Latin word for “to move,” provides the energy and direction for people’s actions (Eccles et al., 1998 ; Ryan et al., 2019 ). It is one of the most studied constructs in educational psychology (Koenka, 2020 ) and several major motivation theories have been established in the past decades (see Urhahne & Wijnia, 2023 ). Across these theories, motivation is generally viewed as a multifaceted construct, often defined as “a process in which goal-directed activity is instigated and sustained” (Pintrich & Schunk, 1996 , p. 4; Schunk et al., 2014 , p. 5; see also Linnenbrink-Garcia & Patall, 2016 ; Urhahne & Wijnia, 2023 ). In their overview, Graham and Weiner ( 2012 ) used three basic motivation questions—“Can I do it?,” “Do I want to do it?” and “Why am I doing this task?”—to categorize the constructs within these different theories (for similar attempts, see Eccles et al., 1998 ; Pintrich, 2003a ).

Can I Do It?

Students’ beliefs about their abilities and control over learning are central to their academic performance (Pintrich, 2003a). Skinner (1996) identified three types of beliefs: means–ends relations, agent–means relations, and agent–ends relations. Beliefs about means–ends relations involve students’ perceptions of factors influencing school success, such as ability, effort, others, or chance (Skinner, 1996). This meta-analysis refers to means–ends beliefs as “control beliefs.” Control-of-learning beliefs concern the conviction that one can control success through effort rather than through external factors such as chance (Duncan & McKeachie, 2005). They are similar to the concept of “locus of control” (Rotter, 1966; Skinner, 1996), in which an internal locus of control or student-related causes (effort and ability) are contrasted with an external locus of control or non-student-related causes (chance or teacher). Perceptions of internal control are more conducive to learning than perceptions of external control (Pintrich, 2003a).

The second type, agent–means relations, encompasses students’ competence beliefs, including self-efficacy, expectancy, and perceived competence (Skinner, 1996 ). Self-efficacy refers specifically to students’ confidence about organizing and executing specific school tasks (Bandura, 1997 , 2006 ). However, self-efficacy measures often include items focusing on perceived chances of future success (i.e., expectancy for success; Wigfield & Eccles, 2000 ) and feelings of competence (Schunk & DiBenedetto, 2020 ), a broadening of the original concept.

The third type, agent–ends beliefs, addresses students’ beliefs about their influence on their own success, including the belief that they can intentionally attain desired outcomes or prevent undesirable ones (Skinner, 1996 ). Examples include Bandura’s outcome expectations, expressing students’ estimate that their actions will lead to specific outcomes (Bandura, 1977 , 1997 ). Some self-efficacy measures also incorporate outcome expectancies, making it challenging to separate these constructs (Graham & Weiner, 2012 ; Klassen & Usher, 2010 ; Williams, 2010 ). This meta-analysis categorizes agent–means and agent–ends beliefs as “competence beliefs.”

Do I Want to Do It?

“Do I want to do it?” reflects students’ perceptions of task value, a crucial aspect within the context of (situated) expectancy–value theory (Eccles & Wigfield, 2020 ; Graham & Weiner, 2012 ; Wigfield & Eccles, 2000 ). Task value encompasses four primary person–task factors: (a) intrinsic or interest value, (b) attainment value, (c) utility value, and (d) cost (Eccles & Wigfield, 2020 ; Eccles et al., 1983 ; Wigfield & Eccles, 2000 ).

Intrinsic/interest value pertains to the anticipated enjoyment or subjective interest associated with the task (Eccles, 2005 ; Eccles & Wigfield, 2020 ). Attainment value connects task performance with personal identity, highlighting the significance of doing well on a task. Utility value focuses on the task’s usefulness for future goals, such as job prospects. Additionally, each task carries perceived costs (e.g., effort, time, anxiety), leading students to avoid tasks if the costs outweigh the benefits.

Interest value closely aligns with individual interest from interest theory (Hidi & Renninger, 2006 ; Krapp, 2002 ; Renninger & Hidi, 2011 ; Wigfield & Cambria, 2010 ), which Schiefele ( 1991 , 1996 ) divided into feeling-related (enjoyment) and value-related (personal significance) components. Building upon this perspective, we distinguish between the feelings-related aspect (i.e., enjoyment, subjective interest) and the importance-related element of task value (i.e., attainment and utility values; for a similar approach, see Durik et al., 2006 ; Watt et al., 2012 ). This differentiation provides a nuanced understanding of the multifaceted nature of task value, offering insights into students’ task perceptions in problem-driven learning.

Why Am I Doing This Task?

“Why am I doing this task?” refers to students’ reasons for engaging in an activity (Graham & Weiner, 2012 ; Pintrich, 2003a ). Two popular theories within this approach are achievement goal theory (Dweck & Leggett, 1988 ; Elliot & McGregor, 2001 ; Senko et al., 2011 ; Urdan & Kaplan, 2020 ) and self-determination theory (Deci & Ryan, 1985 , 2000 ; Ryan & Deci, 2000a , b , 2017 , 2020 ).

Achievement goal theory distinguishes between mastery and performance goals (Elliot & McGregor, 2001 ; Senko et al., 2011 ; Urdan & Kaplan, 2020 ). Mastery goal orientation focuses on learning and skill/task mastery, while performance goal orientation centers on getting good grades or approval from others. Mastery goals used to be viewed as more beneficial for student behavior. However, mixed results regarding the association of performance goals with achievement have led to further refinements of the theory, such as the inclusion of an approach–avoidance dimension (Elliot & McGregor, 2001 ) and additional adjustments (Elliot et al., 2011 ; Senko et al., 2011 ).

Self-determination theory, another prominent theory, distinguishes between autonomous and controlled motivation (Deci & Ryan, 1985, 2000; Ryan & Deci, 2017, 2020). Autonomously motivated students experience psychological freedom and perform activities out of interest (intrinsic motivation) or personal importance (identified motivation). Controlled motivation concerns feelings of pressure, either from within, such as shame or guilt (introjected motivation), or from external demands (external motivation). Autonomous motivation is associated with more favorable student outcomes than controlled motivation (Howard et al., 2021).

The concept of intrinsic motivation within self-determination theory differs from interest value (Eccles & Wigfield, 2020 ) and interest (Hidi, 2006 ; Renninger & Hidi, 2022 ). Intrinsic motivation focuses on the origin of students’ decision to engage in a task, driven by their beliefs. In contrast, interest value concerns the source of the activity’s value (Eccles, 2005 ) and involves a person’s interaction with the environment (Renninger & Hidi, 2022 ). This distinction is crucial in our current meta-analysis.

Regarding students’ reasons for studying, we classify them as either “intrinsic” (comprising mastery goals and autonomous motivation) or “extrinsic” (encompassing performance goals and controlled motivation). This distinction is made because most studies in our meta-analysis treated students’ reasons for studying as a dichotomous construct (i.e., intrinsic vs. extrinsic). Furthermore, prior research has revealed strong, positive correlations between autonomous motivation and mastery goals and moderate, positive correlations between controlled motivation and performance goals (Assor et al., 2009 ; Howard et al., 2021 ). Figure 1 provides a visual overview of the motivation categories used in our meta-analysis.

Fig. 1 Overview of motivation constructs in this meta-analysis

Our current meta-analysis also includes attitudes toward school subjects. An attitude signifies a person’s overall positive or negative evaluation of engaging in specific activities (Ajzen, 1991; Germann, 1988). Attitudes are often measured in the context of science, technology, engineering, and mathematics (STEM) subjects (Osborne et al., 2003; Potvin & Hasni, 2014). There is no agreed-upon definition of attitude toward STEM or other school subjects, and the concept can encompass a range of factors. Some attitude measures focus primarily on students’ enjoyment/interest (e.g., “During science class, I usually am interested”; Germann, 1988), while other authors argue that attitude can also encompass perceptions of the importance and usefulness of the subject, competence beliefs, and career interests (e.g., Fraser, 1981; Hilton et al., 2004; Osborne et al., 2003; Potvin & Hasni, 2014). Given the overlap with motivation concepts, we chose to incorporate both motivation and attitudes in our analysis to achieve a more comprehensive understanding of the impacts of problem-driven learning. Specifically, we included attitude measures in our meta-analysis if their items and subscales shared conceptual similarities with the constructs outlined in Fig. 1 (namely, competence beliefs, importance, and feelings-related values such as interest/enjoyment). Additionally, we included a subcategory gauging students’ future interest in pursuing a course or a career linked to the respective school subject, labeled as “future interest.”

Student-Centered, Problem-Driven Learning and Motivation

Student-centered, problem-driven learning pedagogies share four key principles: learning should be (a) contextual, (b) constructive, (c) collaborative, and (d) self-directed (Dolmans, 2021). Contextual learning concerns engaging with real-world problems that are professionally or socially relevant. Constructive learning involves constructing one’s own understanding by connecting new information with prior knowledge (Loyens et al., 2022). In the collaborative component, students collectively discuss, reason, and build on each other’s learning (Dolmans, 2021). Self-directed learning involves actively planning, monitoring, and evaluating one’s learning, and can include elements such as selecting one’s learning goals and learning sources (Dolmans, 2021; Loyens et al., 2008). We focus on the three distinct but closely related instructional methods that are most frequently studied within problem-driven learning: PBL, PjBL, and CBL (cf. Nagarajan & Overton, 2019).

Problem-Based Learning

In PBL, small student groups work on real-life problems, guided by a teacher (Barrows, 1996). Developed in the late 1960s to reform medical education at McMaster University (Canada), PBL aimed to boost student motivation and learning (Spaulding, 1968; Wijnia & Servant-Miklos, 2019). Involving first-year students with patient-related problems was expected to make learning more meaningful and motivating (Servant-Miklos, 2019; Spaulding, 1969). PBL was further refined at Maastricht University (the Netherlands) and adopted in various curricula and courses worldwide (Servant-Miklos et al., 2019), often with the instructional aim of fostering intrinsic motivation and other important outcomes, such as knowledge retention and professional skills (Barrows, 1986; Hmelo-Silver, 2004; Norman & Schmidt, 1992).

PBL’s key features are: (a) starting the learning process with a problem to activate students’ prior knowledge and interest in learning; (b) student-centered, active learning; (c) small group collaboration (5 to 12 students); (d) teacher guidance; and (e) self-directed learning with adequate self-study time (Barrows, 1996 ; Hmelo-Silver, 2004 ; Schmidt et al., 2009 ).

The problem can be a case, story, visual prompt, or phenomenon that needs explaining (Barrows, 1996 ). These problems are designed to be optimally challenging and—combined with appropriate teacher scaffolding—could enhance students’ motivational beliefs and values (Belland et al., 2013 ). Schmidt et al. ( 2007 ) provided an example of the problem “Little Monsters” from a clinical psychology course focused on the phenomenon “phobias”:

Coming home from work, tired and in need of a hot bath, Anita, an account manager, discovers two spiders in her tub. She shrinks back, screams, and runs away. Her heart pounds, a cold sweat is coming over her. A neighbor saves her from her difficult situation by killing the little animals using a newspaper. (p. 92)

A PBL cycle involves (a) defining the problem and identifying knowledge gaps through discussion, (b) information gathering and self-study, and (c) debriefing or reporting (Barrows, 1985 ; Schmidt, 1983 ). In the initial phase, students use prior knowledge and common sense to discuss the problem, leading to the identification of knowledge gaps that result in self-study questions (i.e., learning goals). The experience of these knowledge gaps has been shown to trigger students’ interest in further learning (Rotgans & Schmidt, 2011 , 2014 ).

The self-study phase varies from several hours to days or a week, depending on the problem’s complexity and the student population (Wijnia et al., 2019 ). During self-study, students gather new information by reading (e.g., books, articles, and websites), consulting experts, or conducting experiments (Gallagher et al., 1995 ; Poikela & Poikela, 2006 ; Schmidt et al., 2009 ). These information sources can be student-selected, instructor-suggested, or both, but having some degree of choice in this selection has been shown to foster students’ competence beliefs and autonomous motivation (Wijnia et al., 2015 ).

After the self-study phase, students return to their groups to discuss their findings and answer the self-study questions (Schmidt, 1983 ). Motivational problems are commonly reported in this phase (Dolmans & Schmidt, 2006 ), possibly due to a drop in students’ interest when they close their knowledge gap (Rotgans & Schmidt, 2014 ). In some implementations, the reporting phase can include project elements, such as a presentation or report (Wijnia et al., 2019 ), which could make learning more meaningful. The collaborative element during the initial discussion and reporting phase can affect motivation both positively (e.g., enjoying social interaction) and negatively (e.g., experiencing pressure to perform well; Wijnia et al., 2011 ). Although students formulate their own learning goals and may select (in part) their information sources, they often need to stay within the boundaries of the problem’s intended learning objectives, limiting the level of student control.

Project-Based Learning

PjBL originated from the project method (Kilpatrick, 1918 ) and was further developed by Blumenfeld and colleagues in the 1990s in science education (Blumenfeld et al., 1991 ; Krajcik & Blumenfeld, 2006 ; Larmer et al., 2015 ; Pecore, 2015 ; Savery, 2006 ). Its central feature is the projects through which students learn a field’s central concepts and principles (Blumenfeld et al., 1991 ; Thomas, 2000 ).

PjBL contains the following components: (a) projects that are relevant to students’ lives, featuring authentic contexts or real-world impact; (b) planning and execution of investigations to answer questions; (c) collaboration with peers, teachers, and sometimes the community; and (d) culmination in a tangible artifact or product (Krajcik & Shin, 2014 ; Larmer et al., 2015 ; Loyens et al., 2022 ; Thomas, 2000 ).

Projects often start with a driving question or problem that is either student-selected or proposed by the teacher. For instance, a middle school science class might explore “What is the quality of water in my river/stream?” (Novak & Krajcik, 2019 ; Singer et al., 2000 ). This question may evolve from initial inquiries into students’ water usage, followed by learning experiences to introduce relevant science topics such as “watershed” and “pollution” (Novak & Krajcik, 2019 ). Students then collaboratively design and conduct investigations to respond to the driving question, culminating in community presentations as the final product. However, driving questions can also be abstract (“When is war justified?”) or focused on finding a solution to a problem (“How can we improve this website so that more people will use it?”; Larmer & Mergendoller, 2010 ).

PjBL possesses several motivating features (Larmer et al., 2015). First, as in PBL, the collaborative aspect can affect students’ motivation both positively and negatively; clear group norms and accountability are essential (Blumenfeld et al., 1991, 1996). Second, PjBL gives students a voice and choice (Larmer et al., 2015), creating a sense of “ownership” (Blumenfeld et al., 1991) that can promote feelings of autonomy (Deci & Ryan, 2000). Third, the project’s authenticity allows students to connect themselves with the learning content, enhancing their interest in the project and its perceived importance (Blumenfeld et al., 1991; Eccles & Wigfield, 2020; Renninger & Hidi, 2022). PjBL typically offers more student control than PBL or CBL (Loyens & Rikers, 2017), and teachers play a critical role in supporting students and fostering their competence beliefs (Blumenfeld et al., 1991).

Case-Based Learning

In CBL, students are presented with a specific case that requires them to apply their acquired knowledge (Loyens & Rikers, 2017 ). There is some debate about its origins and relationship with PBL. Some argue that CBL has its roots in the case method employed at Harvard Law School and Harvard Business School (Herreid, 2011 ; Merseth, 1991 ), while others consider modern CBL as a particular form of PBL (Loyens & Rikers, 2017 ; B. Williams, 2005 ). Key features of CBL include: (a) students preparing the case beforehand; (b) students applying their knowledge to solve or explain the case; (c) teachers playing a facilitative role; (d) collaborative case discussions; and (e) typically no post-session self-study (Baeten et al., 2013 ; Loyens & Rikers, 2017 ; Srinivasan et al., 2007 ; Williams, 2005 ).

Cases in CBL often involve realistic problems or scenarios that require solutions or explanations. Cases can vary from a short paragraph (Srinivasan et al., 2007 ) to multiple pages (Baeten et al., 2013 ). In medical education, these cases can offer background information on a patient, including laboratory results, vital signs, and symptoms, similar to PBL (B. Williams, 2005 ). However, unlike PBL, students are often provided with predefined (multiple-choice or open-ended) questions related to the case. These questions are answered in small-group settings under the guidance of a teacher (Baeten et al., 2013 ). Students may also receive articles or books to aid in answering these questions. Due to the predetermined nature of case questions, CBL offers students less autonomy than PBL and PjBL.

Baeten et al. (2013) described the implementation of CBL in a child development course in a teacher education program. Students received cases (2–16 pages) for specific developmental phases, which they read at home. During the CBL session, students worked in small groups, using a child development book to answer true/false and open-ended questions related to the case. For instance, a case about fourth-grade students might include questions such as: “Fourth graders like construction games. Which examples do you recognize in the case? Relate those construction games to the thinking skills of school children” (Baeten et al., 2013, p. 490). Afterward, students could correct their answers using an answer key. As in PBL and PjBL, the social aspect of CBL and the application of knowledge to real-world cases have been identified as potential motivating factors that enhance students’ interest and their perceptions of the subject’s importance (Baeten et al., 2013; Ertmer et al., 1996; Loyens et al., 2022). In the Appendix, we summarize the most important differences between PBL, PjBL, and CBL.

Research Questions and Moderators

Several reviews and meta-analyses have examined the effects of PBL (e.g., Batdı, 2014 ; Demirel & Dağyar, 2016 ; Dolmans et al., 2016 ; Hung et al., 2019 ; Loyens et al., 2008 , 2023 ; Schmidt et al., 2009 ; Strobel & Van Barneveld, 2009 ; Üstün, 2012 ), PjBL (e.g., Chen & Yang, 2019 ; Guo et al., 2020 ), and CBL (e.g., Maia et al., 2023 ; McLean, 2016 ; Thistlethwaite et al., 2012 ; Üstün, 2012 ) on student outcomes. The effects on students’ achievement have been a primary area of investigation, with some studies reporting positive effects (Chen & Yang, 2019 ; Maia et al., 2023 ), while others indicated mixed effects (Strobel & Van Barneveld, 2009 ; Thistlethwaite et al., 2012 ).

Some meta-analyses have included motivation as an outcome metric along with other variables (e.g., Maia et al., 2023 ; Üstün, 2012 ). For instance, Üstün ( 2012 ) found positive effects of PBL and CBL on science students’ motivation and self-regulated learning, as compared to “traditional” teaching. However, Üstün did not differentiate between these constructs or consider the multifaceted nature of motivation. Additionally, these meta-analyses were confined to specific domains, such as medical or science education.

To our knowledge, this is the first comprehensive meta-analysis investigating the effects on students’ motivation of problem-driven instruction when compared to teacher-centered instruction across diverse academic domains. Our meta-analysis addresses three research questions: 1) What is the effect of student-centered, problem-driven learning on students’ motivation?; 2) Does the effect of student-centered, problem-driven learning differ per motivation construct?; and 3) To what extent do implementation-related nuances moderate the effects of student-centered, problem-driven learning on student motivation?

For our first question, we investigate the combined effect of PBL, PjBL, and CBL on student motivation. This inquiry is essential, as the motivational benefits of problem-driven learning are often assumed, despite evidence highlighting motivational challenges in these methods (Rotgans & Schmidt, 2019 ; Wijnia & Servant-Miklos, 2019 ). Recognizing motivation’s multifaceted nature (Schunk et al., 2014 ), our second question delves into whether the effects of student-centered, problem-driven learning differ per motivation outcome. PBL, PjBL, and CBL are believed to foster intrinsic motivation and value perceptions (Harackiewicz et al., 2016 ; Hmelo-Silver, 2004 ; Larmer et al., 2015 ). Our nuanced approach provides a deeper understanding of the specific effects of problem-driven learning.

Our third question investigates how implementation-related nuances moderate the impact of student-centered, problem-driven learning on student motivation. Given the distinctions in origin and design features among PBL, PjBL, and CBL (see the Appendix), understanding implementation differences is crucial (cf. Hung et al., 2019 ). We examine the following moderators:

- Type of problem-driven learning: Learning in the context of real-world problems is considered an essential motivating factor, but differences in the role and timing of the problem and level of student control could potentially result in differential effectiveness of PBL, PjBL, and CBL.

- Creation of products: We assess the effect of product creation, which is integral to PjBL but sometimes incorporated into PBL (Wijnia et al., 2019).

- Domain: We examine whether the domain (i.e., healthcare, STEM, or other) influences the effectiveness of problem-driven learning, considering PBL’s origin in medical education and PjBL’s connection with science education (Barrows, 1996; Krajcik & Blumenfeld, 2006).

- Educational level: Recognizing that PBL and CBL originated in higher education (Barrows, 1996; Williams, 2005), we explore differences in implementation effectiveness between K-12 and higher education settings. Younger learners may require more preparation before problem-driven learning can be applied (Ertmer & Simons, 2006; Simons & Klein, 2007; Torp & Sage, 2002) and more support from teachers in regulating their learning (Bembenutty et al., 2016; Sameroff, 2010).

- Level of implementation and duration: We also examine differences between curriculum-wide and single-course implementations of problem-driven learning and the duration of the exposure to problem-driven learning (at least 12 weeks or less than 12 weeks; Slavin, 2008), as task novelty and variety can trigger students’ interest (Berlyne, 1978; Renninger & Hidi, 2011), while repeated exposure to PBL can become boring (Wijnia et al., 2011).
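As a rough illustration of how the last moderator in the list above could be turned into categorical codes, consider the sketch below. Only the 12-week cutoff and the curriculum-wide versus single-course distinction come from the text; the class name, function names, and category labels are hypothetical and are not the authors' coding scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Implementation:
    """Hypothetical record describing one study's intervention."""
    weeks: float            # length of exposure to problem-driven learning
    curriculum_wide: bool   # True if applied across a curriculum, False if one course


def code_duration(weeks: float) -> str:
    """Dichotomize duration at the 12-week cutoff named in the text."""
    return "at least 12 weeks" if weeks >= 12 else "less than 12 weeks"


def code_level(curriculum_wide: bool) -> str:
    """Label the level of implementation (labels are illustrative)."""
    return "curriculum" if curriculum_wide else "single course"


# Made-up example: an eight-week intervention in a single course.
study = Implementation(weeks=8, curriculum_wide=False)
duration_code = code_duration(study.weeks)
level_code = code_level(study.curriculum_wide)
print(duration_code, "|", level_code)
```

Dichotomizing duration keeps the moderator analysis simple, at the cost of treating a 12-week and a multi-year intervention as the same category.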

We also consider several study quality factors (What Works Clearinghouse, 2022 ), such as (a) random assignment to treatment conditions, (b) the quality of the motivation measure (e.g., reliability and the use of validated scales), and (c) the quality of the author’s description of the problem-driven method. Additionally, we assess publication bias by examining if publication type (e.g., articles vs. gray literature) significantly predicts effect size outcomes (Polanin et al., 2016 ).

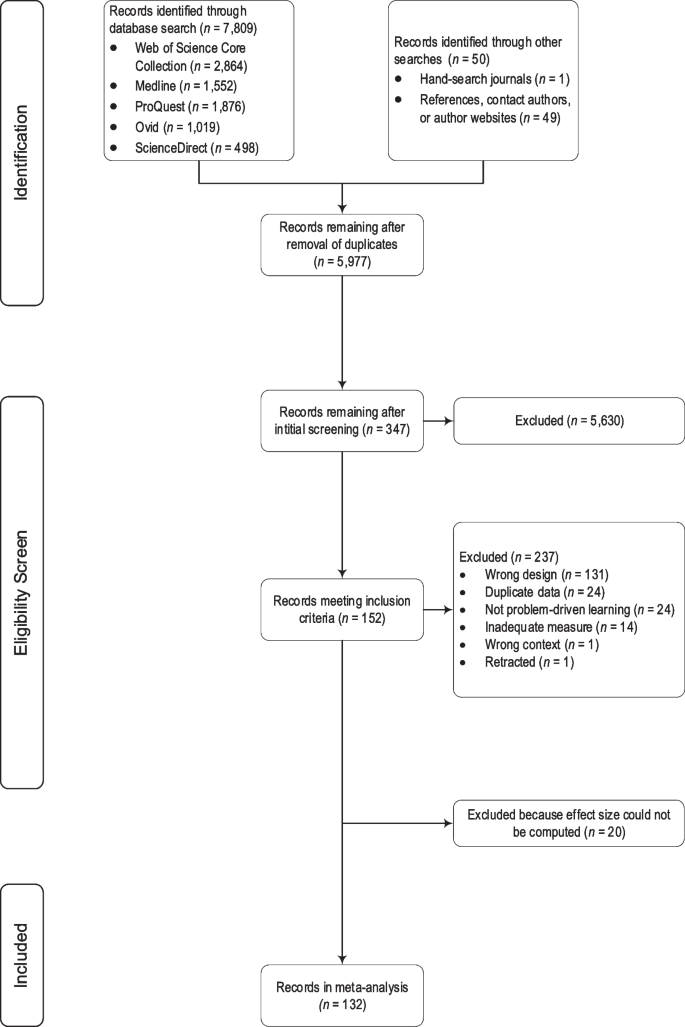

Search Strategy and Selection Process

Figure 2 shows the search and study selection process, following the PRISMA guidelines (Page et al., 2021). We systematically searched various databases at [location blinded for review], focusing on manuscripts from 1970 to May 2023 (ProQuest Platform: ERIC, ProQuest Dissertations and Theses Global, and Social Science Database; Web of Science: Core Collection and Medline; OVID: APA PsycINFO; ScienceDirect). Footnote 1 The start date of 1970 was chosen based on when PBL originated (Spaulding, 1969). The initial search was conducted on April 27, 2018, and was updated on May 4, 2023. The search strategies combined 15 search terms for the instructional method with 27 search terms for motivation (see Table S1, Supplementary Information [SI]).
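The combination of two term lists described above can be sketched as a Boolean query in which alternatives within a list are joined by OR and the two lists are joined by AND. This is only an illustration of the general pattern: the terms below are placeholders (the actual 15 and 27 terms are in Table S1), the helper names are hypothetical, and each database has its own query syntax that is not reproduced here.

```python
from typing import Sequence


def or_block(terms: Sequence[str]) -> str:
    """Join alternatives with OR, quoting phrases that contain a space."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(method_terms: Sequence[str], motivation_terms: Sequence[str]) -> str:
    """Require at least one method term AND at least one motivation term."""
    return or_block(method_terms) + " AND " + or_block(motivation_terms)


# Placeholder terms for illustration only; not the authors' search terms.
method_terms = ["problem-based learning", "project-based learning", "case-based learning"]
motivation_terms = ["motivation", "self-efficacy", "interest"]

query = build_query(method_terms, motivation_terms)
print(query)
```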

Fig. 2 PRISMA flowchart of the search and study selection process

We screened search results on the Rayyan platform (Ouzzani et al., 2016), with 61.09% of titles and abstracts screened by two authors (96.57% interrater agreement). The remaining abstracts were screened by the first author. In addition, we hand-searched the Interdisciplinary Journal of Problem-Based Learning and the Journal of Problem Based Learning in Higher Education and screened the reference lists of selected records and meta-analyses.
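The interrater agreement reported above is a percentage. Assuming it refers to simple percent agreement (the share of double-screened records on which both raters made the same decision), it could be computed as in the sketch below. The function name and the screening decisions are made up for illustration.

```python
from typing import Sequence


def percent_agreement(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Percentage of records on which two raters made the same decision."""
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must have screened the same records.")
    if not rater_a:
        raise ValueError("At least one record is required.")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


# Made-up screening decisions for five records; the raters disagree on the third.
rater_a = ["include", "exclude", "exclude", "include", "exclude"]
rater_b = ["include", "exclude", "include", "include", "exclude"]

agreement = percent_agreement(rater_a, rater_b)
print(f"{agreement:.2f}% agreement")
```

Percent agreement does not correct for agreement expected by chance, which is worth keeping in mind when most records are excluded by both raters.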

We used the following inclusion criteria to determine our final sample—studies had to: (a) investigate PBL, PjBL, or CBL; (b) examine a (K-12 or higher education) student sample in a classroom setting; (c) measure one of the motivation constructs depicted in Fig. 1 on a Likert-type/continuous scale; (d) compare the effects of problem-driven learning on motivation with a control group (i.e., “traditional” or “conventional” lecture-based/teacher-centered group) using a quasi-experimental design or randomized controlled trial; (e) be written in English or German; and (f) report sufficient statistical data to compute effect sizes.

When we identified two or more records that contained duplicate data (e.g., dissertations also published as journal articles), we only included one of these records in our final count. We cited the published paper unless additional relevant data or samples were reported in the dissertation. In those instances, we cited both records, but counted them as one record.

Studies were excluded when the instructional method did not match our definition of PBL, PjBL, or CBL (“not problem-driven learning;” e.g., Abate et al., 2022 ). We further excluded studies investigating in-service teachers, residents, or patients and interventions aiming to promote health habits (“wrong context;” e.g., Karimi et al., 2019 ). Moreover, studies with frequency-based measures or measures that did not match our definition of motivation were excluded (“inadequate measure;” e.g., Kolarik, 2009 ). Additionally, records were excluded if they did not have a control group (e.g., single-group pretest–posttest designs; e.g., Liu, 2004 ) or if it was unclear whether the control group received teacher-centered instruction (“wrong design;” e.g., Huang et al., 2019 ). We contacted the corresponding author when eligible studies did not provide sufficient statistical data to compute effect sizes (e.g., M , SD , n per group and measurement point; see the Effect Size Calculation and Data Analyses section). For 20 papers, we had insufficient information to compute effect sizes.

Coding of Moderators

We coded several characteristics of the research report, sample, treatment, and motivation measures (see Table 1 ). When available, interrater agreement is reported. The quality of the instructional method (low, moderate, high) was coded by two authors independently (78.79% of the 132 included records). We cross-referenced the stated definition and implementation of the method against the defining features of PBL, PjBL, and CBL. We have included coding examples in Sect. 2 of the Supplementary Information .

For each measure, we coded the definition, example items, scale name and source (author-developed or existing scale), and reliability (at least 0.60 or lower than 0.60; What Works Clearinghouse, 2022 ). To ensure the accuracy and consistency of our coding, two authors independently assessed the specific motivation construct targeted by each measure (see Table 1 for a breakdown). When attitude (e.g., toward a STEM subject) was measured, scores for interest (i.e., feelings-related value), importance, and competence beliefs were assigned to those categories, while an extra classification, labeled “future interest,” was introduced for subscales concentrating on career aspirations. For measures producing only a cumulative or general score, we designated the category “attitude (general measure).” Additionally, two authors independently rated the quality of the motivation constructs as low, moderate, or high. When established and reliable measures were employed, the rating tended to be higher, contingent on how the scale was used. For instance, if the original scale encompassed multiple subscales, yet the author reported only an overall “motivation” score, this led to a less favorable quality rating. When scales were inadequately described and sample items were unavailable, the ratings were also comparatively lower.

For the moderators educational level, academic domain, implementation level, and duration, two authors independently coded 20.17% of the records remaining after initial screening, which falls within the guidelines from O’Connor and Joffe ( 2020 ) for double coding in qualitative research. The remainder of the records were coded for these moderators by the first author. To ensure that no mistakes were made by the first author in coding these moderators, three research assistants additionally coded a subsample of the records for comparison.

Effect Size Calculation and Data Analyses

Our final sample consisted of 132 records. Seven records reported data for two studies or student groups (e.g., gender or cohort) and several records reported results for more than one motivation measure, resulting in 139 subsamples ( N = 20,154) and 288 effect sizes. Standardized mean difference effect sizes (Cohen’s d ; < 0.20 = trivial, 0.20 = small, 0.50 = medium, 0.80 = large) were calculated in Comprehensive Meta-Analysis statistical software (version 4.0; Borenstein et al., 2009 ). We calculated a combined mean and standard deviation for the PBL groups (Higgins & Green, 2011 ) when two PBL groups were compared with one control group (Lykke et al., 2015 ; Mykyten et al., 2008 ; Williams et al., 1998 ).
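As an illustration of the two computations described above, the following minimal sketch merges two treatment groups into one and then computes Cohen's d with a common large-sample variance approximation. The function names and example values are hypothetical, and the sketch is not the internal routine of Comprehensive Meta-Analysis; the merging formula is the standard one for combining subgroup means and standard deviations.

```python
import math

def combine_groups(n1, m1, sd1, n2, m2, sd2):
    """Merge two subgroups into a single group (sample size, mean, SD)."""
    n = n1 + n2
    mean = (n1 * m1 + n2 * m2) / n
    # Pooled within-group variation plus the variation between the two group means
    var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2
           + (n1 * n2 / n) * (m1 - m2) ** 2) / (n - 1)
    return n, mean, math.sqrt(var)

def cohens_d(n_t, m_t, sd_t, n_c, m_c, sd_c):
    """Standardized mean difference (treatment minus control) and its variance."""
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2)
                          / (n_t + n_c - 2))
    d = (m_t - m_c) / pooled_sd
    # Large-sample approximation of the sampling variance of d
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    return d, var_d

# Hypothetical example: two PBL groups compared with one control group
n_pbl, m_pbl, sd_pbl = combine_groups(10, 2.0, 1.0, 10, 4.0, 1.0)
d, var_d = cohens_d(n_pbl, m_pbl, sd_pbl, 20, 2.5, 1.2)
```

Merging the groups before computing d avoids counting the shared control group twice.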

For the studies with independent groups post-test only designs , we used both groups’ means, standard deviations, and sample sizes to calculate the effect sizes. If standard deviations were not reported, we used the t -value or p -value and sample size, and if reported, the means or mean difference of both groups. For independent groups pre-posttest designs , we used sample sizes, the pre- and posttest means and standard deviations of the groups, and the correlation between pre- and posttest scores to compute effect sizes. If the pretest–posttest correlation was unavailable or could not be calculated with the reported statistics (see Higgins & Green, 2011 ), we assumed a correlation of 0.50, because the available correlations in our dataset suggested that most pre-post measures were moderately correlated, which also corresponds with other research on motivation (see Cook et al., 2011 ). If means and standard deviations were not reported separately for the pre- and posttest, we used the mean change in each group and the standard deviation of the difference scores. If standard deviations were unavailable, we used the mean change in each group, F for the difference between changes, and sample size to compute effect sizes. If an intervention study reported three time-points (i.e., pretest, mid-intervention, and posttest), we only included the pre- and posttest scores (e.g., LeJeune, 2002 ). Post-score standard deviations were used to standardize the effect size of independent groups pre-posttest designs because, in most studies, the correlation could not be derived with high precision (Borenstein et al., 2009 ) and because we were mainly interested in the main effect of problem-driven learning (Grgic et al., 2017 ).
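The conversions described in this paragraph can be sketched as follows. This is a minimal illustration with hypothetical function names and values, using textbook formulas; the exact computations in Comprehensive Meta-Analysis, in particular the variance of the pre-post effect size, may differ and are not reproduced here.

```python
import math

def d_from_t(t, n_t, n_c):
    """Recover d from an independent-samples t-value and the group sizes."""
    return t * math.sqrt(1 / n_t + 1 / n_c)

def sd_of_change(sd_pre, sd_post, r=0.50):
    """SD of difference scores; r defaults to the assumed correlation of 0.50."""
    return math.sqrt(sd_pre ** 2 + sd_post ** 2 - 2 * r * sd_pre * sd_post)

def d_pre_post(pre_t, post_t, sd_post_t, n_t, pre_c, post_c, sd_post_c, n_c):
    """Difference in mean change, standardized by the pooled posttest SD."""
    pooled_post_sd = math.sqrt(((n_t - 1) * sd_post_t ** 2
                                + (n_c - 1) * sd_post_c ** 2) / (n_t + n_c - 2))
    return ((post_t - pre_t) - (post_c - pre_c)) / pooled_post_sd

# Hypothetical study: treatment gains 0.6 points, control gains 0.2 points
d = d_pre_post(3.0, 3.6, 0.8, 30, 3.0, 3.2, 0.8, 30)
```

The correlation enters through the standard deviation of the change scores: a higher pre-post correlation gives a smaller change-score SD and therefore a more precise estimate.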

For most motivation constructs, a higher mean/increase for the treatment (problem-driven learning) relative to the control group indicated a positive effect (unless otherwise stated). However, increases in posttest scores or higher means/increases for the treatment relative to the control group for controlled motivation and performance goals or for scales evaluating demotivation were coded as negative effects.

Analyses were performed using Comprehensive Meta-Analysis statistical software (version 4.0; Borenstein et al., 2009 ) and R Statistical Software (v4.3.2; R Core Team, 2021 ) using several packages designed for meta-analysis (Harrer et al., 2022 ). To answer Research Question 1, we used random-effects models to estimate mean effect sizes for all outcomes, in which each study was weighted by the inverse of its variance (Borenstein et al., 2009 ; Harrer et al., 2022 ; Lipsey & Wilson, 2001 ). We calculated the I² (25% = low, 50% = moderate, 75% = high heterogeneity) and Q statistics to assess statistical heterogeneity (Borenstein et al., 2009 ; Higgins & Thompson, 2002 ). To answer our second and third research questions, we conducted several moderator analyses. Additionally, we performed moderator analyses for certain quality indicators (e.g., randomization). We also calculated one effect size per motivation construct and instructional method. Finally, we assessed publication bias.
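To make the inverse-variance weighting and the heterogeneity statistics concrete, here is a minimal sketch of a two-level random-effects model. It uses the DerSimonian-Laird estimator of the between-study variance because it has a closed form; this is a substitution for illustration, not the restricted maximum likelihood estimation used in the reported analyses. All names and input values are hypothetical.

```python
import math

def random_effects(effects, variances):
    """Pooled effect, SE, Q, I-squared (%), and tau-squared (DerSimonian-Laird)."""
    k = len(effects)
    w = [1.0 / v for v in variances]                  # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0   # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]    # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return {"pooled": pooled, "se": se, "q": q, "df": df, "i2": i2, "tau2": tau2}

# Hypothetical effect sizes and sampling variances
result = random_effects([0.10, 0.45, 0.80, 0.55], [0.04, 0.05, 0.06, 0.03])
```

Adding the between-study variance to every sampling variance flattens the weights, so small studies count relatively more under a random-effects model than under a fixed-effect model.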

Descriptives for the Final Sample

Tables S1 to S4 (see SI) present an overview of the characteristics of all included records. PBL was investigated most often (83 subsamples, 59.71%), followed by PjBL (37 subsamples, 26.62%) and CBL (19 subsamples, 13.67%). Studies covered data from 24 different countries, with most studies conducted in the US (36 subsamples, 25.90%) or Turkey (35 subsamples, 25.18%). More than half of the studies took place in higher education settings (83 subsamples, 59.71%), followed by Grades 7–12 (41 subsamples, 29.50%) and Grades 1–6 (15 subsamples, 10.79%). Although PBL and CBL were popular in the healthcare domain (34 subsamples, 24.46%), most studies were conducted in the STEM domain (74 subsamples, 53.24%). Other investigated academic disciplines included social studies, psychology, economics, and law (31 subsamples, 22.30%). Motivation was measured as part of the main research questions and hypotheses in most of the subsamples (77%); in the other subsamples, motivation was measured as a supplemental outcome.

Research Question 1: Combined Effect of Problem-Driven Learning on Motivation

We first examined the overall effect of student-centered, problem-driven learning on students’ motivation. We began with a random-effects meta-analysis with restricted maximum likelihood estimation, using subsample as the unit of analysis, in the package meta (Balduzzi et al., 2019 ), which yielded a moderate, positive effect of d = 0.504 (95% CI [0.363, 0.644]). When we examined outliers, we identified 4 subsamples (2.88%) with an effect size falling outside 3 SD of the overall effect of d = 0.504 (1 negative and 3 positive effects). We conducted a leave-one-out analysis on the two-level model to examine the effect of these outliers. The resulting effect sizes ranged from 0.47 to 0.53, and the 95% CIs remained stable, ranging from [0.35, 0.60] to [0.40, 0.66], indicating that the estimate was not driven by a single influential subsample; therefore, we did not exclude these studies from our analyses.
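The logic of a leave-one-out analysis can be sketched as follows: the pooled effect is re-estimated once per subsample, each time omitting that subsample. For simplicity the sketch pools with a DerSimonian-Laird random-effects estimator rather than restricted maximum likelihood, and all names and values are hypothetical.

```python
def pooled_effect(effects, variances):
    """Random-effects pooled mean (DerSimonian-Laird, used here for brevity)."""
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]
    return sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)

def leave_one_out(effects, variances):
    """Pooled effect after omitting each subsample in turn."""
    return [
        pooled_effect(effects[:i] + effects[i + 1:], variances[:i] + variances[i + 1:])
        for i in range(len(effects))
    ]

# Hypothetical data with one unusually large effect in the last position
estimates = leave_one_out([0.30, 0.45, 0.50, 2.40], [0.05, 0.04, 0.06, 0.08])
spread = max(estimates) - min(estimates)
```

A narrow range of re-estimated effects, as reported above, suggests that no single subsample drives the pooled result.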

Because several records reported results on multiple motivation measures and/or samples, our data were nested. Therefore, we tested whether modeling of the nested data structure improved our estimate of the pooled effect of problem-driven learning on students’ motivation. We compared the results of a four-level, three-level, and two-level meta-analysis, using the metafor package with restricted maximum likelihood (Viechtbauer, 2010 ; see Table 2 ). Lower values of AIC and BIC, and a significant likelihood ratio test ( p < 0.05), indicate a better fit. We found that the three-level model had a significantly better fit compared to the two-level model with level 3 (i.e., subsample) heterogeneity constrained to zero. When comparing the four-level and three-level model, results were mixed. BIC values indicated a better fit for the three-level model, whereas AIC and the results of the likelihood ratio test indicated a better fit for the four-level model. We selected the three-level model, since it is less complex than the four-level model and because the estimated between-record variance was relatively low ( T² (Level 4) = 0.05).
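The fit criteria used in this comparison can be computed from the log-likelihoods of two nested models, as in the sketch below. The log-likelihood values, parameter counts, and the observation count passed to BIC are hypothetical, and software packages differ in which count they use for BIC under restricted maximum likelihood. The likelihood ratio test assumes the models differ by exactly one variance component (1 degree of freedom).

```python
import math

def aic(log_lik, n_params):
    """Akaike information criterion; lower values indicate better fit."""
    return -2.0 * log_lik + 2.0 * n_params

def bic(log_lik, n_params, n_obs):
    """Bayesian information criterion; penalizes parameters more as n_obs grows."""
    return -2.0 * log_lik + n_params * math.log(n_obs)

def lrt_one_df(log_lik_reduced, log_lik_full):
    """Likelihood ratio statistic and p-value for one extra parameter."""
    stat = 2.0 * (log_lik_full - log_lik_reduced)
    # Survival function of a chi-square distribution with 1 degree of freedom
    p_value = math.erfc(math.sqrt(max(stat, 0.0) / 2.0))
    return stat, p_value

# Hypothetical log-likelihoods: reduced (2 parameters) vs. full (3 parameters)
stat, p = lrt_one_df(-310.0, -300.0)
aic_reduced, aic_full = aic(-310.0, 2), aic(-300.0, 3)
bic_reduced, bic_full = bic(-310.0, 2, 288), bic(-300.0, 3, 288)
```

Because BIC penalizes each additional parameter more heavily than AIC once the number of observations exceeds about seven, the two criteria can disagree, which is the pattern described above for the four-level versus three-level comparison.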

The pooled effect size based on the three-level meta-analytic model was d = 0.498 ( SE = 0.07, 95% CI [0.354, 0.641]), p < 0.001, indicating a small to moderate, positive effect. The effect was heterogeneous, Q (287) = 1092.98, p < 0.001, which implies that the variability in effect sizes has sources other than sampling error, and moderators should be examined. The estimated variance components were T² (Level 3) = 0.58 and T² (Level 2) < 0.001. This means that I² (Level 3) = 75.12% of the total variation can be attributed to between-cluster heterogeneity and I² (Level 2) = 0% to within-cluster heterogeneity, indicating that there are differences between studies, but estimates within studies do not vary more than one would expect based on sampling variability alone.
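One common way to split the total variation across levels in a three-level model is to compare each variance component with a "typical" sampling variance (following Higgins and Thompson's definition). The sketch below assumes that approach; the sampling variances are hypothetical and not taken from the included studies, so the output is not expected to reproduce the percentages reported above.

```python
def typical_sampling_variance(variances):
    """Typical within-study sampling variance across k effect sizes."""
    k = len(variances)
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    return (k - 1) * sum_w / (sum_w ** 2 - sum(wi ** 2 for wi in w))

def i2_by_level(variances, tau2_level2, tau2_level3):
    """Percentage of total variation at each level of a three-level model."""
    v = typical_sampling_variance(variances)
    total = tau2_level2 + tau2_level3 + v
    return {
        "sampling": 100.0 * v / total,        # level 1: sampling error
        "level2": 100.0 * tau2_level2 / total,  # within clusters
        "level3": 100.0 * tau2_level3 / total,  # between clusters
    }

# Hypothetical sampling variances combined with illustrative variance components
shares = i2_by_level([0.15, 0.20, 0.25, 0.18], tau2_level2=0.0, tau2_level3=0.58)
```

The three percentages sum to 100, so a level 2 share near zero means that nearly all heterogeneity beyond sampling error sits between clusters.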

Research Question 2: Differential Effects per Motivation Construct

Because motivation is a multifaceted construct, we examined whether effects of problem-driven learning differed per motivation construct. We examined the moderating effect of motivation constructs in two ways. We first conducted a subgroup analysis with motivation construct as the moderator in the three-level model to test if the test of moderators was statistically significant. We further extended this model to a Correlated and Hierarchical Effects (CHE) Model with robust variance estimation, using the clubSandwich and metafor packages (Pustejovsky, 2022 ; Viechtbauer, 2010 ). The CHE model takes into account that some effect sizes within clusters are based on the same subsample, and that their sampling errors are correlated. We assumed a constant sampling correlation of ρ = 0.60 (Harrer et al., 2022 ). In Table 3 , we have reported the results, in which the moderation analyses are expressed as a meta-regression model with a dummy-coded predictor.
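The assumption of a constant sampling correlation can be made concrete by building the sampling covariance matrix for the effect sizes of one subsample: the diagonal holds the sampling variances and each off-diagonal entry is ρ times the product of the two standard errors. The sketch below is a hypothetical stand-alone illustration of that construction, not the clubSandwich implementation.

```python
import math

def cluster_covariance(variances, rho=0.60):
    """Sampling covariance matrix for effect sizes from the same subsample."""
    k = len(variances)
    matrix = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i == j:
                matrix[i][j] = variances[i]   # sampling variance on the diagonal
            else:
                # Covariance implied by a constant correlation rho
                matrix[i][j] = rho * math.sqrt(variances[i] * variances[j])
    return matrix

# Hypothetical subsample reporting three motivation measures
v_block = cluster_covariance([0.04, 0.09, 0.05])
```

Effect sizes from different subsamples are treated as independent, so the full covariance matrix is block diagonal with one such block per subsample.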

The test of moderators in the three-level model revealed that the type of motivation construct did not statistically significantly moderate the combined effect of student-centered, problem-driven learning on motivation, F (4, 283) = 2.08, p = 0.084. However, results of the CHE model indicated that the effect of problem-driven learning on students’ reasons for studying was statistically significantly lower compared to effects on other motivation constructs. Small to moderate positive effects of problem-driven learning were found on students’ attitude, beliefs (i.e., competence and control beliefs), values, and general motivation, whereas a trivial, positive ( d < 0.20) effect was found for students’ reasons for studying.

Research Question 3: Role of Implementation and Sample Characteristics

Subsequently, we examined the moderating role of several implementation and sample characteristics. We used a similar analytic approach as for the subgroup analysis with motivation construct as the moderator. First, we examined possible differential effects of PBL, PjBL, and CBL on students’ overall motivation (see Table 3 ). Our analyses indicated that PBL, PjBL, and CBL had similar effects on students’ motivation, F (2, 285) = 0.61, p = 0.546. We further looked for differences per motivation construct using Comprehensive Meta-Analysis with subsample as the unit of analysis (see Table 4 ). We found a statistically significant difference among the three instructional methods only for students’ future interest, but this effect was based on just 6 studies (3 PBL and 3 PjBL) and must, therefore, be interpreted with caution.

We further investigated several other moderators related to the implementation of problem-driven learning, such as the creation of products (e.g., website, report, presentation) as part of the learning process, academic domain, educational level, level of implementation (i.e., curriculum-wide or single-course implementation), and duration of the exposure/intervention (i.e., 12+ weeks or shorter). For these analyses, we only examined the overall effect on motivation in problem-driven learning in the three-level and CHE model (see Table 3 ). Our analyses revealed that there were no differences between implementations with or without product creation on motivation. We found statistically significant differences for the moderators “academic domain” and “level of implementation.” Moderate, positive effects were found for studies conducted in the STEM and healthcare domains, whereas a trivial, positive effect was found in other domains. Additionally, in our CHE model, we found a moderate, positive effect size for single-course implementations and interventions compared to a trivial, positive effect for curriculum-wide implementations.

Quality as a Moderator

We further examined several quality indicators, such as randomization, the reliability of the measure (at least 0.60), the use of author-developed vs. existing measures, and our own quality ratings of the motivation measure and the implementation of problem-driven learning (see Table 3 ). Of these quality indicators, only our rating of the motivation measure’s quality moderated the effect of problem-driven learning on motivation. Results of our CHE model revealed a small, positive effect size for motivation constructs rated high in quality (in terms of description and measurement) compared to moderate, positive effects for moderate and low-quality ratings. Reliability and type of scale (author-developed vs. existing measure) did not statistically significantly moderate the effect of problem-driven learning on motivation, but a lower SE (i.e., more precise estimates) was found for scales with a reliability of at least 0.60. Our rating of the quality of the description and implementation of the instructional method did not moderate the effect of problem-driven learning. Finally, publication type (journal article vs. gray literature) did not have a statistically significant moderating effect.

Publication Bias

Publication bias analyses were conducted in Comprehensive Meta-Analysis. To assess publication bias, we inspected the funnel plots by plotting the individual study effect size against the standard error of these effect size estimates. Furthermore, we inspected Egger’s regression intercept (Egger et al., 1997 ), and applied Duval and Tweedie’s ( 2000 ) trim-and-fill technique for the effect of student-centered, problem-driven learning on motivation. The funnel plot for all problem-driven learning methods combined indicated publication bias (see SI, Fig. S1 ). Egger’s linear regression test indicated the presence of funnel plot asymmetry, t (137) = 4.19, p < 0.001. Duval and Tweedie’s trim-and-fill technique (36 studies trimmed at the right side) resulted in adjustment of the effect size from 0.50 to 0.766 (95% CI [0.626, 0.907]).
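Egger's test can be sketched as an ordinary least-squares regression of each standardized effect (effect divided by its standard error) on its precision (one divided by the standard error); an intercept that differs from zero signals funnel plot asymmetry. The following minimal version uses hypothetical data and function names and returns the intercept with its t-statistic on k − 2 degrees of freedom; it is not the Comprehensive Meta-Analysis implementation.

```python
import math

def egger_test(effects, std_errors):
    """Egger's regression: returns (intercept, SE of intercept, t, df)."""
    x = [1.0 / se for se in std_errors]                   # precision
    z = [d / se for d, se in zip(effects, std_errors)]    # standardized effect
    k = len(x)
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar
    df = k - 2
    sse = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    se_intercept = math.sqrt(sse / df * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else math.inf
    return intercept, se_intercept, t_stat, df

# Hypothetical data in which less precise studies report larger effects
intercept, se_int, t_stat, df = egger_test(
    [0.90, 0.70, 0.50, 0.40, 0.35], [0.40, 0.30, 0.20, 0.12, 0.10]
)
```

With 139 subsamples, the test has 137 degrees of freedom, which matches the t (137) reported above.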

There is a prevailing belief that student-centered, problem-driven learning methods can foster students’ motivation, despite evidence highlighting motivational challenges in these methods (Rotgans & Schmidt, 2019 ; Wijnia & Servant-Miklos, 2019 ). This meta-analysis investigated the effects of PBL, PjBL, and CBL on students’ motivation. We additionally examined the effects of several implementation and sample characteristics.

Effects of Student-Centered Problem-Driven Learning on Students’ Motivation

We found a small to moderate, positive effect of problem-driven learning on students’ motivation, suggesting that these student-centered learning methods can be motivating. However, they were not equally effective for each motivation construct. Small or moderate effects were found for students’ attitudes toward STEM or other school subjects, beliefs, values, and general motivation. A trivial, positive effect was found for intrinsic and extrinsic motivation (i.e., reasons for studying), indicating that overall, problem-driven learning has less impact on students’ reasons for studying.

There was no statistically significant difference regarding the effects of PBL, PjBL and CBL on students’ motivation (all constructs combined), suggesting that all three instructional methods have the potential to increase students’ motivation. We found a difference only for students’ future interest (e.g., career interest), but this effect was based on just 6 studies (3 for PBL and 3 for PjBL), and must therefore be interpreted with caution. Although each method has its unique defining features (see the Appendix), overall, these differences did not seem to affect student motivation in our study.

A core principle of problem-driven learning is learning in the context of real-world problems (Dolmans, 2021 ) that aim to spark students’ interest and increase their perceptions of meaningfulness (e.g., Blumenfeld, 1992 ; Blumenfeld et al., 1991 ; Harackiewicz et al., 2016 ; Larmer et al., 2015 ; Schmidt et al., 2011 ). Overall, our results suggest that PBL, PjBL, and CBL can indeed positively affect students’ enjoyment and interest, perceptions of importance, and attitudes toward STEM or other school subjects. Furthermore, the problems used in problem-driven learning are designed to be optimally challenging. Prior research has shown that optimally challenging tasks can help promote students’ competence beliefs because prior knowledge can be activated (Deci & Ryan, 2000 ; Katz & Assor, 2007 ; Pintrich, 2003b ), which could explain the positive effects that we found on students’ competence beliefs.

As mentioned, we found a trivial, positive effect of problem-driven learning on students’ reasons for studying, which consisted of constructs measuring intrinsic motives (including mastery goals and autonomous motivation) and extrinsic motives (including performance goals and controlled motivation). Fostering students’ intrinsic motivation is often mentioned as an important instructional goal of PBL, along with goals such as building a flexible knowledge base and improving students’ collaborative and self-directed learning skills (see Barrows, 1986 ; Hmelo-Silver, 2004 ; Norman & Schmidt, 1992 ). Despite this aim, our results suggest that problem-driven learning only has a trivial effect on students’ intrinsic motivation.

At first glance these results might seem contradictory, as we did find positive effects on students’ perceptions of task value, consisting of interest/enjoyment and importance. As discussed in the introduction, intrinsic motivation differs from interest value in expectancy–value theory (Eccles & Wigfield, 2020 ) and interest (Hidi, 2006 ; Renninger & Hidi, 2022 ). Interest value concerns the source of the activity’s value (Eccles, 2005 ) and involves a person's interaction with the environment (Renninger & Hidi, 2022 ). In contrast, intrinsic motivation focuses on the origin of students’ decision to engage in a task, driven by their beliefs (Eccles, 2005 ; Renninger & Hidi, 2022 ). This distinction may have affected our results.

Another explanation could be the way in which intrinsic motives were operationalized in most studies. When we looked more closely at the studies in which students’ reasons for studying were measured, most subsamples employed measures that focused on the classical distinction between intrinsic versus extrinsic motivation, for example, as measured with the intrinsic and extrinsic goal orientation scales from the Motivated Strategies for Learning Questionnaire (MSLQ; Duncan & McKeachie, 2005 ; Pintrich et al., 1991 ). However, this distinction is outdated and no longer in line with how motivation is operationalized in popular theories such as achievement goal theory (Urdan & Kaplan, 2020 ) and self-determination theory (Ryan & Deci, 2020 ). To gain more insight into the effectiveness of problem-driven learning, more studies are needed that move away from the classical distinction between intrinsic and extrinsic motivation.

Implementation and Sample Characteristics as Moderators

In addition to the type of problem-driven learning, we examined various moderators that could influence the effect of PBL, PjBL, and CBL on motivation. We assessed the effect of product creation as part of the learning process. Creating a product is a defining feature of PjBL (Thomas, 2000 ), but is sometimes also incorporated into PBL (Wijnia et al., 2019 ). Our results, however, did not reveal a statistically significant moderating effect of product creation.

We further examined the role of academic domain and educational level. PBL and CBL originated in higher education and are popular in medical education and nursing programs (Barrows, 1996 ; Williams, 2005 ), and PjBL has a strong connection with science education (Krajcik & Blumenfeld, 2006 ). Our results revealed stronger, positive effect sizes for problem-driven learning in the STEM and healthcare domains compared to other domains. Possibly, this effect is due to the fact that problem-driven learning is strongly connected with these domains. For instance, PBL was developed in medical education and many of the books and process models describing how to implement PBL were developed in medical education as well, making it easier for others to implement it in the same domain (see Wijnia et al., 2019 ). It is also possible that it is easier to connect the learning with real-world problems in these domains, and that selecting and creating context-appropriate problems may be more challenging in other domains (Hung, 2019 ).

We did not find a statistically significant moderating effect for educational level, indicating that positive effects on motivation can be found in K-12 and higher education settings. Nevertheless, several researchers have suggested that younger learners need more preparation before a new instructional method can be applied (Ertmer & Simons, 2006 ; Simons & Klein, 2007 ; Torp & Sage, 2002 ), and younger learners may struggle more with the level of student control that is offered in these instructional methods. Students need a certain level of self-regulated learning (SRL) skills to plan, monitor, and evaluate their learning in student-centered learning (Azevedo et al., 2012 ). However, K-12 learners are still developing their SRL skills and require more support from others in regulating their learning than adult learners (Bembenutty et al., 2016 ; Sameroff, 2010 ), and therefore may seek more assistance from their teachers to cope with student control. Given the delicate balance required in these student-centered approaches—between providing support and allowing students to navigate the problem-solving process collaboratively with their peers—K-12 teachers could be more prone to over-supporting their students or providing too little support, leading to increased frustration for students with less-developed SRL strategies. Therefore, teachers may face certain challenges if they want to implement problem-driven learning in their K-12 classroom. They must take on a new facilitative or coaching role (Ertmer & Simons, 2006 ) and need to support students’ SRL development, which can be challenging (De Smul et al., 2018 ). These issues need to be considered when implementing problem-driven learning.

We further examined the moderating effects of level of implementation in the curriculum and duration. Our results revealed larger, positive effect sizes for studies that investigated single-course implementations or interventions when compared to curriculum-level approaches. These results could suggest that student-centered, problem-driven learning is more effective for short-term motivation than long-term motivation. Variety and novelty can spark curiosity and interest (Berlyne, 1978 ). Therefore, a change in the instructional method or approach could have a short-term motivating effect. However, duration did not have a statistically significant moderating effect. Another issue faced by curriculum-level approaches is that active student-centered programs eventually can show “signs of erosion” (Moust et al., 2005 ) or those involved can become bored with the process (Wijnia et al., 2011 ). In these programs, students and staff members can start to behave in ways that are not in accordance with the instructional method, such as increasing the number of students in a workgroup, skipping steps in the process of analyzing a problem or case, or having teachers take on a directive and dominant role in the learning process. These “signs of erosion” could explain why we found a smaller effect of problem-driven learning on students’ motivation in curriculum-level approaches.

Quality Indicators as Moderators and Publication Bias

We further assessed whether certain quality indicators affected our results, such as randomization, the reliability and source of the scale, and our own ratings of the quality of the measure and the implementation of the instructional method. Of these moderators, only our rating of the measure’s quality moderated the effects. Motivation measures that were rated as higher in quality had lower effect sizes compared to measures rated as low or moderate in quality. The results for reliability of the scale showed that measures with a reliability of 0.60 or higher resulted in more precise estimation of the effect size (i.e., with a lower SE ). A recommendation for researchers in future studies is, therefore, to check the psychometric properties of the scales.

We included gray literature as a way to prevent publication bias (Polanin et al., 2016 ). Although publication type did not moderate the overall effect of problem-driven learning on student motivation, our analyses did reveal publication bias, indicating we need to interpret the results of this meta-analysis with caution.

Limitations and Future Research

A limitation of our study is that the quality of some of the included studies was not optimal, despite the majority (67.6%) being published in peer-reviewed journals. For example, several studies did not report how students were assigned to treatment conditions, used self-constructed measures, or did not clearly describe how problem-driven learning was implemented. There is a need for more randomized controlled trials to investigate the effects of educational methods, as random assignment eliminates bias and because small, quasi-experimental studies can overstate the effect of educational interventions (Cheung & Slavin, 2016 ). Furthermore, future studies could consider What Works Clearinghouse ( 2022 ) guidelines when designing problem-driven learning interventions targeting students’ motivation.

All motivation measures were coded and placed in categories by two authors, to ensure that the constructs were sufficiently similar to include in the same category. However, motivation is a complex construct (Murphy & Alexander, 2000 ). An important limitation of our study is the quality of the measures used in our own study. Although we based the categories in our coding scheme on prior classifications of motivation constructs, differences in the operationalization of measures, even for constructs derived from the same theory, can affect the outcomes in a meta-analysis (Hulleman et al., 2010 ).

The quality of some of the motivation measures in the included studies was not optimal. Many authors did not examine the reliability of the measure they used. Furthermore, authors often used dated scales, such as the MSLQ (Pintrich et al., 1991 ). The MSLQ is a popular measure, as it was designed to measure multiple relevant motivational elements from multiple motivation theories in one survey, and it has been applied in different educational contexts and countries (Credé & Phillips, 2011 ). It remains a well-cited and well-used scale (e.g., Wang et al., 2023 ); therefore, it makes sense that it is also a popular measure among researchers interested in examining the impact of problem-driven learning on student motivation. Nevertheless, several items have undesirable psychometric properties (Credé & Phillips, 2011 ), and the measure no longer addresses all the nuances of the major motivation theories in educational psychology. The use of the MSLQ in multiple studies meant that our meta-analysis could not provide insights into the effects of problem-driven learning on different types of achievement goals (Elliot et al., 2011 ) and different types of extrinsic motivation as distinguished in self-determination theory (Deci & Ryan, 2000 ). This limits what we know about the effects of problem-driven learning on the reasons and goals for students’ studying or engagement in learning tasks. To investigate the effects of instructional methods on motivation, future researchers need to use measures that are well-aligned with and take into account recent developments in motivational theories.

Although results revealed a positive effect for student-centered, problem-driven learning, there was large heterogeneity among studies. Even though we determined for each study whether PBL, PjBL or CBL was implemented, differences could still exist among the different instructional methods (Maudsley, 1999 ), which might have affected the outcomes. We examined the effects of several moderators. Other interesting moderators could have been the level of preparation/training students received before the method was implemented, the level of professional development training teachers received, and the exact amount of student control offered. Unfortunately, these aspects could not be adequately coded based on the information in the included records. Furthermore, more research is needed on the different conditions and characteristics that determine how and when these instructional methods work (Hung et al., 2019 ).

Implications for Practice and Research

Our results demonstrated lower effect sizes for implementations outside the STEM and healthcare domains. These disparities point to key considerations that can enhance the success of problem-driven learning methods. A pivotal determinant of the efficacy of problem-driven learning for learning and motivation lies in the quality of the chosen case, problem, or project (Hung, 2019; Larmer et al., 2015), as well as the adeptness of teachers in facilitating and scaffolding student learning (Belland et al., 2013). Problem design is a critical step in problem-driven learning, and it is important that the problem is well-aligned with the learning objectives of the course and offers an appropriate real-world context (Hung, 2019). The role of teacher scaffolding also cannot be ignored. Teachers face many challenges if they want to implement problem-driven learning methods, as they have to take on a new facilitative or coaching role (Ertmer & Simons, 2006; Kokotsaki et al., 2016; Wijnen et al., 2017). Because teachers often feel uncertain about their abilities to promote and teach students SRL skills (De Smul et al., 2018), this could require further teacher training and professional development on supporting students’ SRL and student self-directedness (Heirweg et al., 2022).

Furthermore, our results suggest a potential dampening effect associated with curriculum-level implementations. As mentioned, curriculum-level approaches in PBL can show “signs of erosion” (Moust et al., 2005), such as skipping the initial discussion of a problem. Given that the problem's role is paramount in sustaining student motivation (Rotgans & Schmidt, 2011, 2014), this step should not be skipped. Furthermore, curriculum-level approaches could consider incorporating more variation in their program and switching between different types of problem-driven learning to prevent boredom with the process (Wijnia et al., 2011).

It is a prevailing assumption that student-centered, problem-driven learning can increase students’ motivation (Rotgans & Schmidt, 2019; Wijnia & Servant-Miklos, 2019). The results of our meta-analysis, however, reveal nuanced insights. We observed a small to moderate positive effect of PBL, PjBL, and CBL on students’ motivation (compared to teacher-centered learning). This effect is more pronounced for students’ competence beliefs, perceptions of value, and attitudes toward school subjects, such as science. Concerning students’ reasons for studying, we found a trivial, positive effect. The latter effect conflicts with the popular belief that methods such as PBL can increase students’ intrinsic motivation for studying (e.g., Hmelo-Silver, 2004).

Our results further indicated that PBL, PjBL, and CBL have similar effects on motivation. However, analyses revealed that effects were larger in STEM and healthcare domains compared to other domains. Single-course implementations and interventions also resulted in larger, positive effects, suggesting that a novelty effect might be at work. In conclusion, our meta-analysis offers a balanced perspective on the influence of problem-driven learning on student motivation. While the impact is generally positive, the intricate interplay of factors such as academic domain and implementation level underscores the need for a nuanced approach to leveraging these instructional methods effectively with regard to increasing student motivation.

Data Availability

The data file and script of this study are available at https://osf.io/wrxts/?view_only=abf34b8a6dfe490fa549d2387e7ed896 .

ScienceDirect was only searched in May 2023. Web of Science Core Collection is a collection of different databases. The following databases were included in our search: Science Citation Index Expanded (SCI-EXPANDED – 1975-present), Social Sciences Citation Index (SSCI) –1975-present, Arts & Humanities Citation Index (A&HCI) – 1975-present, Conference Proceedings Citation Index – Science (CPCI-S) – 1990-present, Conference Proceedings Citation Index – Social Science & Humanities (CPCI-SHH) – 1990-present, Emerging Sources Citation Index (ESCI) – 2005-present.

Initially, we intended to include studies with a single-group pre-post design. Because pre-post effect sizes can lead to biased outcomes, we removed them from our meta-analysis (Cuijpers et al., 2017). Analyses regarding the effect of problem-driven learning on motivation in pretest–posttest designs are reported in the Supplementary Information (Sect. 4).

Certain double-coded articles were eventually excluded because they had a single-group pre-post design; we did, however, utilize them for the calculation of interrater reliability.

References included in the meta-analysis are listed in the Supplementary Information

Abate, A., Atnafu, M., & Michael, K. (2022). Visualization and problem-based learning approaches and students’ attitude toward learning mathematics. Pedagogical Research, 7 (2), em0119. https://doi.org/10.29333/pr/11725

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50 (2), 179–211. https://doi.org/10.1016/0749-5978(91)90020-T

Assor, A., Vansteenkiste, M., & Kaplan, A. (2009). Identified versus introjected approach and introjected avoidance motivations in school and in sports: The limited benefits of self-worth strivings. Journal of Educational Psychology, 101 (2), 482–497. https://doi.org/10.1037/a0014236

Azevedo, R., Behnagh, R. F., Duffy, M., Harley, J. M., & Trevors, G. (2012). Metacognition and self-regulated learning in student-centered learning environments. In D. Jonassen & S. Land (Eds.), Theoretical foundations of learning environments (2nd ed., pp. 171–197). Routledge.

Baeten, M., Dochy, F., & Struyven, K. (2013). The effects of different learning environments on students’ motivation for learning and their achievement. British Journal of Educational Psychology, 83 (3), 484–501. https://doi.org/10.1111/j.2044-8279.2012.02076.x

Balduzzi, S., Rücker, G., & Schwarzer, G. (2019). How to perform a meta-analysis with R: A practical tutorial. Evidence-Based Mental Health, 22 , 153–160. https://doi.org/10.1136/ebmental-2019-300117

Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84 (2), 191–215. https://doi.org/10.1037/0033-295X.84.2.191

Bandura, A. (1997). Self-efficacy: The exercise of control . W H Freeman/Times Books/ Henry Holt & Co.

Bandura, A. (2006). Guide for constructing self-efficacy scales. In F. Pajares & T. Urdan (Eds.), Self-efficacy beliefs of adolescents (pp. 307–337). Information Age Publishing.

Barrows, H. S. (1985). How to design a problem-based curriculum for the preclinical years . Springer.

Barrows, H. S. (1986). A taxonomy of problem-based learning methods. Medical Education, 20 (6), 481–486. https://doi.org/10.1111/j.1365-2923.1986.tb01386.x

Barrows, H. S. (1996). Problem-based learning in medicine and beyond: A brief overview. New Directions for Teaching and Learning, 1996 (68), 3–12. https://doi.org/10.1002/tl.37219966804

Batdı, V. (2014). The effects of a problem based learning approach on students’ attitudes levels: A meta-analysis. Educational Research and Reviews, 9 (9), 272–276. https://doi.org/10.5897/ERR2014.1771

Belland, B. R., Kim, C., & Hannafin, M. J. (2013). A framework for designing scaffolds that improve motivation and cognition. Educational Psychologist, 48 (4), 243–270. https://doi.org/10.1080/00461520.2013.838920

Bembenutty, H., White, M. C., & DiBenedetto, M. K. (2016). Applying social cognitive theory in the development of self-regulated competencies throughout K-12 grades. In A. A. Lipnevich, F. Preckel, & R. D. Roberts (Eds.), Psychosocial skills and school systems in the 21st century: Theory, research, and practice (pp. 215–239). Springer.

Berlyne, D. E. (1978). Curiosity and learning. Motivation and Emotion, 2 (2), 97–175. https://doi.org/10.1007/BF00993037

Black, A. E., & Deci, E. L. (2000). The effects of instructors’ autonomy support and students’ autonomous motivation on learning organic chemistry: A self-determination theory perspective. Science Education, 84 (6), 740–756. https://doi.org/10.1002/1098-237X(200011)84:6%3c740::AID-SCE4%3e3.0.CO;2-3

Blumenfeld, P. C. (1992). Classroom learning and motivation: Clarifying and expanding goal theory. Journal of Educational Psychology, 84 (3), 272–281. https://doi.org/10.1037/0022-0663.84.3.272

Blumenfeld, P. C., Marx, R. W., Soloway, E., & Krajcik, J. (1996). Learning with peers: From small group cooperation to collaborative communities. Educational Researcher, 25 (8), 37–39. https://doi.org/10.3102/0013189X0250080

Blumenfeld, P. C., Soloway, E., Marx, R. W., Krajcik, J. S., Guzdial, M., & Palincsar, A. (1991). Motivating project-based learning: Sustaining the doing, supporting the learning. Educational Psychologist, 26 (3–4), 369–398. https://doi.org/10.1080/00461520.1991.9653139

Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2009). Introduction to meta-analysis . Wiley.

Chen, C. H., & Yang, Y. C. (2019). Revisiting the effects of project-based learning on students’ academic achievement: A meta-analysis investigating moderators. Educational Research Review, 26 (1), 71–81. https://doi.org/10.1016/j.edurev.2018.11.001

Cheung, A. C. K., & Slavin, R. E. (2016). How methodological features affect effect sizes in education. Educational Researcher, 45 (5), 283–292. https://doi.org/10.3102/0013189X16656615

Cook, D. A., Thompson, W. G., & Thomas, K. G. (2011). The motivated strategies for learning questionnaire: Score validity among medicine residents. Medical Education, 45 (12), 1230–1240. https://doi.org/10.1111/j.1365-2923.2011.04077.x

Credé, M., & Phillips, L. A. (2011). A meta-analytic review of the motivated strategies for learning questionnaire. Learning and Individual Differences, 21 (4), 337–346. https://doi.org/10.1016/j.lindif.2011.03.002

Cuijpers, P., Weitz, E., Cristea, I. A., & Twisk, J. (2017). Pre-post effect sizes should be avoided in meta-analyses. Epidemiology and Psychiatric Sciences, 26 (4), 364–368. https://doi.org/10.1017/S2045796016000809

Deci, E. L., & Ryan, R. M. (1985). Intrinsic motivation and self-determination in human behavior . Plenum Press.

Deci, E. L., & Ryan, R. M. (2000). The “what” and “why” of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry, 11 (4), 227–268. https://doi.org/10.1207/S15327965PLI1104_01

Demirel, M., & Dağyar, M. (2016). Effects of problem-based learning on attitude: A meta-analysis study. Eurasia Journal of Mathematics, Science and Technology Education, 12 (8), 2115–2137. https://doi.org/10.12973/eurasia.2016.1293a

De Smul, M., Heirweg, S., Van Keer, H., Devos, G., & Vandevelde, S. (2018). How competent do teachers feel instructing self-regulated learning strategies? Development and validation of the teacher self-efficacy scale to implement self-regulated learning. Teaching and Teacher Education, 71 , 214–225. https://doi.org/10.1016/j.tate.2018.01.001

Dolmans, D. H. J. M. (2021). How can we look at PBL practice and research differently? [Keynote address]. American Educational Research Association, online meeting. https://eur.cloud.panopto.eu/Panopto/Pages/Viewer.aspx?id=baf192b1-8989-45b9-b7af-ad070006a35b

Dolmans, D. H. J. M., Loyens, S. M. M., Marcq, H., & Gijbels, D. (2016). Deep and surface learning in problem-based learning: A review of the literature. Advances in Health Sciences Education, 21 (5), 1087–1112. https://doi.org/10.1007/s10459-015-9645-6

Dolmans, D. H. J. M., & Schmidt, H. G. (2006). What do we know about cognitive and motivational effects of small group tutorials in problem-based learning? Advances in Health Sciences Education, 11 (4), 321–336. https://doi.org/10.1007/s10459-006-9012-8

Dolmans, D. H. J. M., Wolfhagen, I. H. A. P., Van der Vleuten, C. P. M., & Wijnen, W. H. F. W. (2001). Solving problems with group work in problem-based learning: Hold on to the philosophy. Medical Education, 35 (9), 884–889. https://doi.org/10.1046/j.1365-2923.2001.00915.x

Duncan, T. G., & McKeachie, W. J. (2005). The making of the motivated strategies for learning questionnaire. Educational Psychologist, 40 (2), 117–128. https://doi.org/10.1207/s15326985ep4002_6

Durik, A. M., Vida, M., & Eccles, J. S. (2006). Task values and ability beliefs as predictors of high school literacy choices. Journal of Educational Psychology, 98 (2), 382–393. https://doi.org/10.1037/0022-0663.98.2.382

Duval, S. J., & Tweedie, R. L. (2000). A nonparametric “trim and fill” method of accounting for publication bias in meta-analysis. Journal of the American Statistical Association, 95 (449), 89–98. https://doi.org/10.2307/2669529

Dweck, C. S., & Leggett, E. L. (1988). A social-cognitive approach to motivation and personality. Psychological Review, 95 (2), 256–273. https://doi.org/10.1037/0033-295X.95.2.256

Eccles, J. S. (2005). Subjective task values and the Eccles et al. model of achievement-related choices. In A. J. Elliot & C. S. Dweck (Eds.), Handbook of competence and motivation (pp. 105–121). Guilford.

Eccles, J. S., Adler, T. F., Futterman, R., Goff, S. B., Kaczala, C. M., Meece, J. L., & Midgley, C. (1983). Expectancies, values, and academic behaviors. In J. T. Spence (Ed.), Achievement and achievement motivation (pp. 75–146). W. H. Freeman.

Eccles, J. S., & Wigfield, A. (2020). From expectancy-value theory to situated expectancy-value theory: A developmental, social cognitive, and sociocultural perspective on motivation. Contemporary Educational Psychology, 61 , 101859. https://doi.org/10.1016/j.cedpsych.2020.101859

Eccles, J. S., Wigfield, A., & Schiefele, U. (1998). Motivation to succeed. In W. Damon & N. Eisenberg (Eds.), Handbook of child psychology: Social, emotional, and personality development (pp. 1017–1095). Wiley.

Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. British Medical Journal, 315 (7109), 629–634. https://doi.org/10.1136/bmj.315.7109.629